Dr. Jaworski’s research interests include consciousness and its relation to the brain, artificial intelligence and its moral and legal implications, the dialogue between science and religion, the role that cognitive biases and critical thinking skills play in a democratic society, and the nature of love and its contribution to human flourishing.

Have questions for Dr. Jaworski regarding his research? Please contact him.

The Problem of Consciousness

The word ‘consciousness’ is used in a variety of ways in philosophy and the sciences. The concept of consciousness that has seized the spotlight over the past two decades is what is sometimes called ‘phenomenal consciousness’.

As you read this you are having experiences or conscious states. What earns them the label ‘conscious’ is that they have phenomenal character; that is, there is something it’s like to be in them: there is something it’s like to see red, which is different from what it’s like to hear middle C, which is different yet again from what it’s like to taste coffee or to have a hangover. The what-it’s-likeness of an experience—its phenomenal character—is what you would try to describe if asked “What is it like to…?,” for example, “What is it like to free fall?” or “What is it like to eat crème brûlée?” or “What is it like to see mauve?” In answering these questions, you would try to provide descriptions that enabled your audience to go some of the way toward imagining how it would seem to them if they were to have the experiences for themselves.

The reason phenomenal consciousness has seized the spotlight is that many philosophers and scientists believe that it poses a serious conceptual problem—the so-called ‘hard problem of consciousness.’ The hard problem arises on account of a view that sees the natural world as a vast undifferentiated sea of matter and energy that can be described in principle by physics—either our best current physics or some best future physics. Some parts of this vast sea of matter and energy have phenomenal consciousness and some don’t. You and I, for instance, are conscious, but ostensibly the tables, chairs, rocks, and trees surrounding us are not. When a grape lollipop comes into contact with your tongue, the activation of your nerves is accompanied by an experience: there is something it’s like for you when your tongue comes into contact with the lollipop. When the same lollipop comes into contact with a rock, on the other hand, there is nothing it’s like for the rock to come in contact with it. If the universe is populated by the same matter and energy throughout, then this difference between conscious and nonconscious beings should seem very puzzling.

If you and the rock are made of the same stuff, and that stuff is governed by the same principles or laws throughout the universe—laws described by physics—then it seems that you and the rock should be endowed with the same powers: either you should both be conscious beings or you should both be nonconscious beings. Yet there is a difference between the two of you—and seemingly a very important one. Consciousness makes a huge difference in our lives. We don’t want to have painful conscious experiences when having a tooth drilled, and we mourn the loss of pleasant conscious experiences when we have a cold and can’t savor fine food. Moreover, we generally value our capacity for consciousness. Few of us would voluntarily choose to have our brains damaged in a way that deprived us of conscious capacities, and we often pity people who, on account of brain damage, slip into persistent unconscious states.

Why is it, then, that some things are conscious and some not? Physics doesn’t appear equipped to provide an answer. The descriptions it provides make no mention of what it’s like to smell a rose or to taste coffee; in fact, they don’t even account for the difference between living things and nonliving ones. A body in free fall is described by physics the same way whether it is living or not, conscious or not. The same is true of physical systems of any sort. If we used the vocabulary of physics to provide a complete description of changes in the brain, that description would still tell us nothing about whether those changes were correlated with the smell of a rose, with conscious experiences of some other sort, or with any conscious experiences at all. From the standpoint of physics, there is no difference between the matter and energy belonging to a living, conscious being, and the matter and energy belonging to a nonliving or nonconscious one. Physics uses the same vocabulary to describe rocks, trees, and people. In each case, it describes states of matter and energy without regard to life or consciousness.

The only reason we know of any correlations between physical states and conscious experiences is that we have firsthand knowledge of those experiences, and we have discovered over time that those experiences are correlated with certain kinds of physical occurrences. Take away this first-person knowledge, and physics leaves us at a loss to predict what kinds of conscious experiences would accompany changes in the brain or to predict that changes in the brain would be correlated with conscious experiences at all.

The so-called hard problem of consciousness is the task of explaining why physical occurrences are correlated with conscious experiences: why don’t physical occurrences all occur “in the dark,” so to speak, without any accompanying phenomenal character? Sometimes the hard problem is formulated not as a why-question but as a how-question: how do physical occurrences give rise to conscious experiences? This way of formulating the problem is based on the assumption that at bottom everything in the natural world must be explained by appeal to physical occurrences. If that assumption is true, then conscious experience must also be explained by appeal to physical occurrences; there must be a theory, in other words, which says that if there are such-and-such physical occurrences, then there will be such-and-such conscious experiences.

One of the most useful ways of formulating a conceptual problem is in terms of a number of claims that are jointly inconsistent—that is, a number of claims that together imply a contradiction. Because those claims imply a contradiction, they cannot all be true; at least one of them must be false. Jointly inconsistent claims pose a conceptual problem when it’s not obvious which of the claims is false. When that happens, we’re puzzled. We have difficulty understanding how the world is.

We can formulate the hard problem of consciousness in terms of a number of jointly inconsistent claims:

1. Conscious experiences are natural phenomena.

2. All natural phenomena can be explained in principle by appeal to natural occurrences.

3. All natural occurrences can be described and explained exhaustively by physics.

4. The descriptions and explanations that physics provides are formulated in a vocabulary that does not distinguish conscious beings from nonconscious ones.

5. If the vocabulary of physics does not distinguish conscious beings from nonconscious ones, then physics cannot describe and explain conscious experiences.

Claims (1)–(3) imply that conscious experiences can be exhaustively described and explained by physics. Claims (4) and (5), on the other hand, imply just the opposite: physics cannot describe and explain conscious experiences. Claims (1)–(5) thus imply a contradiction. At least one of the claims must, therefore, be false, but it’s not obvious which. The outline of the problem I’ve given makes each of the claims seem plausible, so we feel puzzled.

The reason it’s useful to formulate a conceptual problem in terms of jointly inconsistent claims is that it points us in the direction of a possible solution. To solve the problem, it is sufficient to reject one of the claims. We can thus examine the claims one at a time and check the reasoning behind each for errors or weaknesses.
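
The structure of this reasoning can be checked mechanically. What follows is a minimal sketch, not a definitive formalization: it assumes the five claims can be simplified to propositional form, and the four variables (`natural`, `explicable`, `physical`, `blind`) and the helper names are hypothetical labels introduced only for illustration. In particular, claims (2) and (3) are restricted here to the case of conscious experiences, which is a simplifying assumption. The sketch searches every truth assignment to see whether all five claims can hold together, and whether they can hold once any single claim is rejected.

```python
from itertools import product


def implies(p, q):
    """Material conditional: 'if p then q'."""
    return (not p) or q


def claims(natural, explicable, physical, blind):
    """Truth values of claims (1)-(5) under one assignment (a simplification).

    natural    : conscious experiences are natural phenomena
    explicable : they can be explained by appeal to natural occurrences
    physical   : they can be described and explained by physics
    blind      : the vocabulary of physics does not distinguish conscious
                 beings from nonconscious ones
    """
    return [
        natural,                        # claim (1)
        implies(natural, explicable),   # claim (2), restricted to experiences
        implies(explicable, physical),  # claim (3), restricted to experiences
        blind,                          # claim (4)
        implies(blind, not physical),   # claim (5)
    ]


# All 16 ways of assigning True/False to the four variables.
ASSIGNMENTS = list(product([True, False], repeat=4))


def consistent(kept):
    """True if some assignment makes every claim with an index in `kept` true."""
    return any(all(claims(*a)[i] for i in kept) for a in ASSIGNMENTS)


if __name__ == "__main__":
    print("All five claims consistent:", consistent(range(5)))
    for dropped in range(5):
        kept = [i for i in range(5) if i != dropped]
        print(f"Rejecting claim ({dropped + 1}) restores consistency:",
              consistent(kept))
```

Under these assumptions, the search finds no assignment that satisfies all five claims, while rejecting any single claim leaves the remaining four satisfiable. That matches the point made above: the claims cannot all be true, and giving up any one of them is sufficient to remove the contradiction. The sketch says nothing about which claim ought to be rejected.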

What is Intelligence?

The nature of intelligence is a vexed issue among psychologists. Broadly speaking, we can understand intelligence as the ability to learn from experience and use knowledge to manipulate the environment in order to adapt to new situations and solve new problems (Sternberg 2017). A quotation attributed (perhaps erroneously) to Stephen Hawking tries to encapsulate the idea: “Intelligence is the ability to adapt to change.”

What is Artificial Intelligence?

‘Artificial intelligence’ refers to the ability of an artificially constructed system to interact with people and the environment in ways resembling interactions we would call ‘intelligent’ if a human were engaging in them.

A great deal of confusion gets introduced into discussions of AI on account of sloppy terminology. If we’re to give accurate assessments about what AI is and what it can do, we need to have a vocabulary that keeps important distinctions straight. We can start by introducing the idea of a system.

Systems

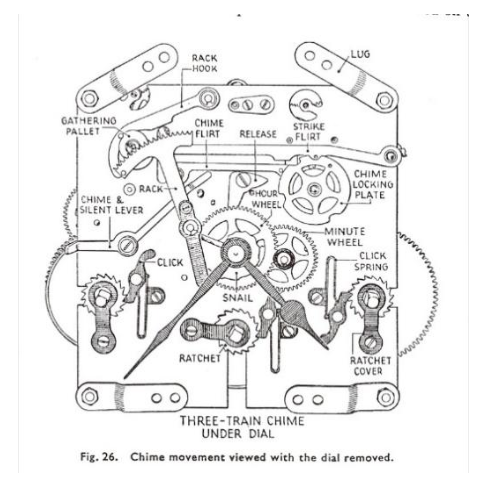

Let a1, a2, …, an be objects of some sort or other. Suppose that under the right conditions, C, the a’s interact with each other in ways that reliably produce a certain result. In that case, let us say that the a’s belong to a system: C(a1, a2, …, an). For example, the pieces of metal in Figure 1 belong to a system (a clock): they interact with each other in ways that reliably tell time. The workers and foodstuffs at a fast-food establishment belong to a system: their interactions reliably produce burgers. Likewise, your heart, lungs, arteries, veins, and various other organs belong to a system that reliably delivers oxygenated blood to various parts of your body, and your nervous system comprises parts whose interactions reliably correlate environmental conditions with physiological changes.

We can draw distinctions among various kinds of systems. First, some systems are natural while others are artificial. Your organ systems are natural. They were not intentionally assembled according to the designs of, say, an engineer; they are instead the results of unconscious processes of biological development that occur throughout the natural world. The fast-food establishment and the clock, on the other hand, are artificial systems: they were set up by human designers according to plans aimed at achieving intended results.

Second, a system might be a unified whole, or it might not be. The workers at a fast food establishment belong to a system, but we wouldn’t say that they compose a unified whole. To say that there is a unified whole composed of the workers is to say that there is an object in addition to the workers, namely the object they compose. A whole is not identical to its parts: it is one, they are many. So if the workers compose a whole, there must exist an object in addition to them which they compose. Yet intuitively there is no such object; there are only the workers themselves. They interact with each other and the foodstuffs in a way that reliably produces burgers, but in doing so, they do not bring into existence a new object of which they are parts. They compose nothing at all.

Consider likewise a pair of tango dancers, Alice and Benny. Under the right conditions, they interact in ways that reliably produce a dance. They thus belong to a system. But they don’t compose some additional third individual. If someone were to say that the system (the couple) dances, this would really just be a shorthand way of talking about Alice and Benny—their individual actions and reciprocal interactions. Any agency we might attribute to the system could be described and explained completely in terms of their cooperative activity alone: the power of each to modulate his or her behavior in coordination with the behavior of the other. The same is true of the fast food establishment. Any activity we might attribute to it can be exhaustively explained by appeal to the interactions among the workers and foodstuffs. Once we describe those interactions, the burger-producing activity we attribute to the fast food establishment has been explained in a way that leaves nothing out. A description of the interactions among the workers and foodstuffs, in other words, provides an explanation that is exhaustive. There is nothing—no additional object—distinct from the workers and foodstuffs themselves to which we need to appeal in order to explain what the system does.

If everything a system does can be exhaustively explained by appeal to the objects belonging to it, the principle of Ockham’s razor dictates that there is no individual beyond those objects. Ockham’s razor is a principle for constructing and/or evaluating theories or explanations. It says that when it comes to formulating a theory or explanation, we should not posit any entities beyond those that are necessary for explaining the target phenomenon. If we can explain some phenomenon by appeal to N entities alone, we should not opt for an explanation that appeals to N+1 entities. If every activity that can be attributed to the fast food establishment can be exhaustively explained by appeal to the workers and foodstuffs alone, then we should not posit an individual beyond the workers and foodstuffs to explain what the fast food establishment does. It is a corollary of Ockham’s razor as I’ve just described it that if there are two explanations, E1 and E2, of a target phenomenon, which are otherwise indistinguishable in their explanatory power, then we should favor the explanation that posits fewer entities: if E1 posits N entities and E2 posits N+1 entities, then we should prefer E1.

In cases like the dancing couple and the fast-food establishment, talk of the system and its activities is really shorthand for a longer description of the objects that belong to the system and their activities. Saying, “The system produces burgers,” is simply a shorthand way of saying, “The workers are doing such-and-such with the foodstuffs.” In general, in these kinds of cases, saying, “The system C(a1, a2, …, an) does X,” is a more efficient way of saying, “Under conditions C, the a’s do such-and-such individually and interact with each other in such-and-such ways.” Speaking of “the system” in these cases is really just a linguistic convenience—a more efficient way of describing the collective activities of the a’s. To insist in these cases that the expression “the system” refers to an individual distinct from the a’s would be an ontological extravagance—a commitment that would go beyond what was necessary to account completely for what the system did.

Belonging to a system is thus different from composing an individual. In cases like the fast-food establishment and the dancing couple, the objects belonging to the system do not compose an individual. Speaking of the system in these cases does not posit an object in addition to the objects belonging to the system; there is no individual distinct from the objects belonging to the system which those objects compose, and to speak of the system’s doings is really just a shorthand way of referring to what the objects belonging to the system collectively do. The fast-food establishment and the dancing couple are thus mere systems, not individuals.

The case of a mere system is different, however, from the case of, say, an organism such as a human being. The physical objects composing you belong to a system insofar as they are spatially and causally related to each other in ways that reliably produce certain results (homeostasis, growth, development, perception, movement, thought, and so on). But they do more than belong to a system: they actually compose an additional individual, namely you. Alice and Benny do not compose an individual in addition to themselves; neither do the fast-food workers. You, however, are something distinct from the physical things composing you.

What distinguishes composite individuals like you from mere systems is that composite individuals have powers that the physical objects composing them do not have. You can do things that your physical components, taken individually or collectively, cannot do. It is you, for instance, not them, that thinks, that feels, that perceives, and so on. Attributing these activities to you, not them, implies that statements like “You think” and “You feel” are not shorthand ways of referring to the collective operations of your components. To describe the spatial and causal relations among those components falls short of describing your thinking and feeling. Thinking and feeling are activities that you—the whole composite individual—engage in.

The foregoing considerations yield a fourfold classification of systems which we can represent using a Punnett square (Figure 2).

| | Natural | Artificial |
| --- | --- | --- |
| A unified whole | A human being | ? |
| Not a unified whole (a “mere system”) | Weather systems, natural selection | Dancing couple, fast-food establishment, clock |

Figure 2. Four types of systems

The mere systems we have considered so far (the fast food establishment and the dancing couple) are both artificial systems. Both result from agents who act to achieve intended goals. Something analogous is true of the clock. The spatial and causal arrangement of the pieces of metal in Figure 1 was set up by human designers according to plans aimed at achieving intended results. Moreover, once we describe the interactions among those pieces of metal, we have succeeded in giving an exhaustive explanation of what the clock does. The clock is thus an artificial system—a mere system, not an individual.

Some natural systems are also mere systems. Consider a weather system. Various atoms and molecules in the atmosphere interact in complex ways with energy from the sun and the planet’s surface to produce precipitation and perceptible variations in atmospheric temperature and pressure. If we were able to describe the complex interactions among these things, we would have provided an exhaustive explanation of the weather. A weather system is not an individual in its own right; it is a mere system.

Think likewise about natural selection. Organisms interact with each other and the environment in ways that result in some of them having better odds for survival and successful reproduction than others. Those organisms and their environments thus belong to a system—a mere system, for once we describe the interactions among the organisms and their environments, we have an exhaustive account of how organisms with these or those traits managed to survive and reproduce with varying degrees of success.

We have considered examples of natural individuals, and of mere systems both natural and artificial. What about artificial individuals—the kinds of things that would occupy the upper right-hand quadrant of the Punnett square? Are there such things? Could there be? Trying to answer this question is, in fact, one of the main goals of this discussion. We’ll start by considering in the next section what kind of thing this would be.

Model Systems

You are a unified whole—an individual in your own right. You engage in activities that the physical objects composing you do not, but you engage in these activities by operating some of your physical components in coordinated ways. You reach for a bottle of beer, for instance, by coordinating the operation of various muscles and nerves, and you bring food from your mouth to your stomach by the coordinated operation of muscles and nerves in your esophagus. The operations of your muscles and nerves, when coordinated in the right way, compose your acts of reaching and swallowing, respectively. In some cases, the coordination of your parts is something you impose consciously and intentionally, as in reaching for the bottle; in other cases, it is neither conscious nor intentional, as in digesting food or increasing blood flow to the legs in response to something fearful. In whatever way the coordinated operation of your parts is accomplished, whether consciously and intentionally or not, your activities are composed of the coordinated operations of your parts.

We could in principle construct a system that mimicked your activities. Imagine that we removed the muscles and nerves you operate when reaching, and reassembled them so that they were capable of causally interacting with each other in ways that were indistinguishable from the ways they interact in you when you reach for a bottle. Imagine, moreover, that we connected them to electrodes activated by a control mechanism that stimulated them so that their interactions reliably resulted in the kinds of movements you produced when reaching. The result would be a system that moved in a way that replicated your movements when you engage in reaching.

Someone who observed the system moving in this way might be tempted to describe it by saying that it was in fact reaching. But this would be an inaccurate description. Reaching is an intentional action. It requires a unified agent who forms an intention to achieve a goal (the goal of, say, grasping a bottle), and who coordinates the operation of its parts to achieve that goal. The system of extracted muscles and nerves, however, is not a unified agent. It is a mere system whose operations can be exhaustively described by the interactions among the muscles, nerves, and electrodes. The muscles, nerves, and electrodes do not compose a whole that forms intentions, and so the system cannot engage in an act of reaching. What the muscles, nerves, and electrodes can do is interact in ways that mimic or simulate an act of reaching.

Constructing a system of this sort might be helpful for a number of purposes. It might be helpful, for instance, in studying how you reach. It might provide a helpful model, in other words, for understanding what’s involved in the activity of reaching. But the system itself would not engage in reaching. To say that the system of muscles, nerves, and electrodes reached would be merely a shorthand way of describing their collective operation—their individual actions and the interactions among them.

What I’ve said about activities like reaching applies also to activities like thinking, feeling, and perceiving. These too are activities in which you engage by coordinating the operations of your parts. Just as reaching is not a random series of neural firings and muscular contractions, neither are thinking, feeling, and perceiving. Each is an activity in which we engage by coordinating the ways that our components operate. And just as we could in principle construct a model that simulates reaching, cognitive scientists, neuroscientists, artificial intelligence researchers, and others sometimes look to construct models of human thinking, feeling, or perceiving by arranging physical objects (or more typically by using software to simulate arrangements of physical objects) whose collective operations mimic the coordinated ways in which parts of the human nervous system operate when people think, feel, or perceive. Their work provides important insights into how we engage in these activities, but as with the system of muscles, nerves, and electrodes, it would be incorrect to say that these systems themselves are thinkers, feelers, or perceivers. They are instead models of what thinkers, feelers, or perceivers do—models of the coordinated interactions among their parts when they engage in thinking, feeling, or perceiving.

Imagine, however, that we could extend the models that cognitive scientists, neuroscientists, and artificial intelligence researchers use. Imagine that we could spatially and causally arrange numerous atoms and molecules in exactly the ways that the atoms and molecules composing you are spatially and causally arranged. The result would be a replica of you—one that would ostensibly have many of the powers you have. I say “many of the powers” not “all of the powers” because attributing some mental activities and powers to an individual requires that certain historical conditions be satisfied. Memory is an example. Remembering a past event requires that the past event actually occurred. You can remember what you did at your second-grade birthday party because you actually did it. Your replica, however, did not. It did not attend its second-grade birthday party (nor did it attend yours), and so it cannot remember what it did there—not even if the components of its nervous system were interacting in precisely the ways the components of your nervous system interact when you remember what you did at your second-grade birthday party. It would thus be incorrect to say that your replica could remember that event. What would be correct to say is instead that the components of your replica’s nervous system were interacting in ways that exactly resemble the ways the components of your nervous system interact when you remember what you did at your second-grade birthday party; that those interactions mimic or simulate or provide a reliable replica or model of your memory; they nevertheless do not compose an act of remembering, nor does your replica actually remember that event. There’s more to be said about this topic, and I will return to it later, but for the time being let us consider what you and your replica have in common, and what distinguishes the two of you from mere systems of physical objects.

Your replica would be capable of homeostasis, growth, development, and movement, just as you are, and it would be capable of thinking, feeling, and perceiving, albeit with some qualifications, as I’ve indicated. The implication of this seems to be that your replica would be a living individual, just as you are, for it would have the powers and engage in the activities that define living individuals. It would simply be a living individual that had been artificially produced. It would be an example of the kind of system that would occupy the upper right-hand quadrant of Figure 2: a unified whole; not a mere system, but an individual in its own right. It would not be a natural system, however, but an artificial one that had been set up by human designers according to plans aimed at achieving intended results.

Would your replica be human? That depends on how ‘human’ is defined. If being human includes a historical condition—if, for instance, humans must, by definition, be biological descendants of individuals who evolved by natural selection—then your replica would not be human. It would be a human replica, if you like. If, on the other hand, being human includes no such condition, then your replica would likely be human; it would just be an artificially produced human.

What we decide to call your replica, however, is less important than what it is, what it can do, and what distinguishes you and it, on the one hand, from systems like the one to which the muscles, nerves, and electrodes belong, on the other. Unlike the latter system, you and your replica are individuals distinct from the physical things composing you. You can do things that cannot be exhaustively explained simply by describing the interactions among the physical things composing you.